Conceptual overview

Our goal is to provide AI-application developers and researchers with:

A set of pre-trained NLP models, pre-defined dialog system components (ML/DL/Rule-based), and pipeline templates;

A framework for implementing and testing their own dialog models;

Tools for application integration with adjacent infrastructure (messengers, helpdesk software, etc.);

Benchmarking environments for conversational models and uniform access to relevant datasets.

Key Concepts

A

Modelis any NLP model that doesn’t necessarily communicates with the user in natural language.A

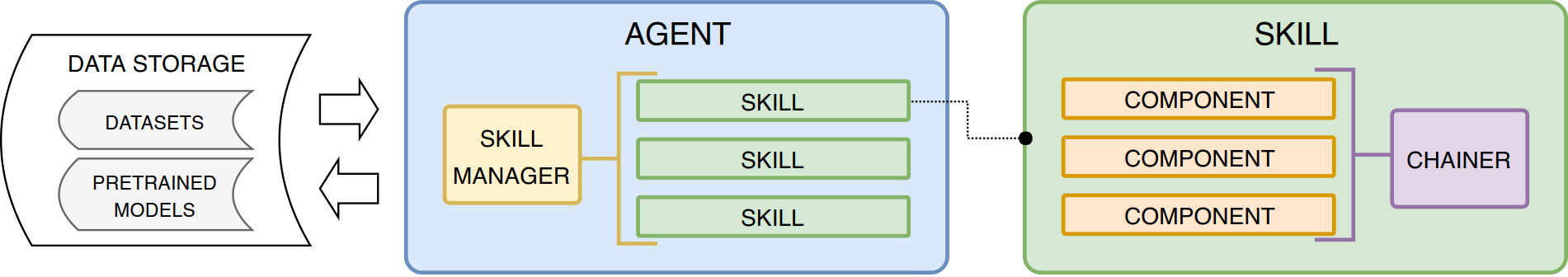

Componentis a reusable functional part of aModel.Rule-based Modelscannot be trained.Machine Learning Modelscan be trained only stand alone.Deep Learning Modelscan be trained independently and in an end-to-end mode being joined in a chain.A

Chainerbuilds a model pipeline from heterogeneous components (Rule-based/ML/DL). It allows one to train and infer models in a pipeline as a whole.
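To make the idea of a pipeline built from heterogeneous components concrete, here is a minimal sketch in plain Python. It does not use the library's actual API: `Lowercaser`, `MajorityWordClassifier`, and `ToyChain` are hypothetical names invented for this illustration, and the convention that "trainable" means "has a `fit` method" is an assumption made here.

```python
from collections import Counter


class Lowercaser:
    """Hypothetical rule-based component: a fixed transformation, nothing to train."""

    def __call__(self, texts):
        return [t.lower() for t in texts]


class MajorityWordClassifier:
    """Hypothetical trainable component: maps each word to its most frequent label."""

    def __init__(self):
        self.word_labels = {}

    def fit(self, texts, labels):
        counts = {}
        for text, label in zip(texts, labels):
            for word in text.split():
                counts.setdefault(word, Counter())[label] += 1
        self.word_labels = {w: c.most_common(1)[0][0] for w, c in counts.items()}

    def __call__(self, texts):
        preds = []
        for text in texts:
            votes = Counter(
                self.word_labels[w] for w in text.split() if w in self.word_labels
            )
            # None signals that no known word was seen in the text.
            preds.append(votes.most_common(1)[0][0] if votes else None)
        return preds


class ToyChain:
    """Hypothetical pipeline: runs components in order, training those that can be trained."""

    def __init__(self, components):
        self.components = components

    def fit(self, texts, labels):
        data = texts
        for component in self.components:
            # Assumption of this sketch: a component is trainable iff it has `fit`.
            if hasattr(component, "fit"):
                component.fit(data, labels)
            data = component(data)

    def __call__(self, texts):
        data = texts
        for component in self.components:
            data = component(data)
        return data


chain = ToyChain([Lowercaser(), MajorityWordClassifier()])
chain.fit(["Hello there", "Goodbye now"], ["greet", "bye"])
predictions = chain(["HELLO", "goodbye friend"])
```

In this toy setup the rule-based step is skipped during training but still transforms the data that the trainable step sees, so training and inference both go through the pipeline as a whole.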

The smallest building block of the library is a Component. A Component stands for any kind of function in an NLP pipeline. It can be implemented as a neural network, a non-neural ML model, or a rule-based system.

Components can be joined into a Model. A Model solves a larger NLP task than a Component. However, in terms of implementation, Models are not different from Components.
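One way to picture why Models and Components need not differ in implementation is the following sketch, in which both are simply callables. This is an illustration in plain Python under that assumption, not the library's own classes; `ToyModel`, `tokenize`, and `count_tokens` are hypothetical names.

```python
def tokenize(text):
    """Hypothetical component: split a string on whitespace."""
    return text.split()


def count_tokens(tokens):
    """Hypothetical component: count the tokens in a list."""
    return len(tokens)


class ToyModel:
    """Hypothetical model: a callable that applies its components in order."""

    def __init__(self, *components):
        self.components = components

    def __call__(self, value):
        for component in self.components:
            value = component(value)
        return value


# A model built from two components.
token_counter = ToyModel(tokenize, count_tokens)

# Because a model is itself a callable, it can serve as a component of a larger model.
is_long = ToyModel(token_counter, lambda n: n > 3)
```

Here `token_counter` is used exactly like `tokenize` or `count_tokens` when it is nested inside `is_long`, which is the sense in which a model behaves like any other component.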

Most DeepPavlov models are built on top of PyTorch. Other external libraries can be used to build basic components.